
Artificial intelligence (AI) has emerged as a slow-burn megatrend. The key shift in recent months has been the market’s awakening to the technology’s potential, and it is arguably to this awakening that the recent strong performance of AI companies can be attributed. But such potential comes with risks.

There is no shortage of articles and opinion pieces on AI and how it will change the world. These range from “AI will solve the climate crisis”1 to “AI poses an existential threat to humanity”2. The reality is that it is too early to predict AI’s lasting impact. AI is likely to profoundly affect some industries and professions while leaving others largely untouched.

This article looks at AI from an ethical investor’s perspective and concludes that, like any tool, AI can be used for good or ill, and its impact on society largely depends on how it is used and on the safeguards put in place to control that use.

Black hat or white?

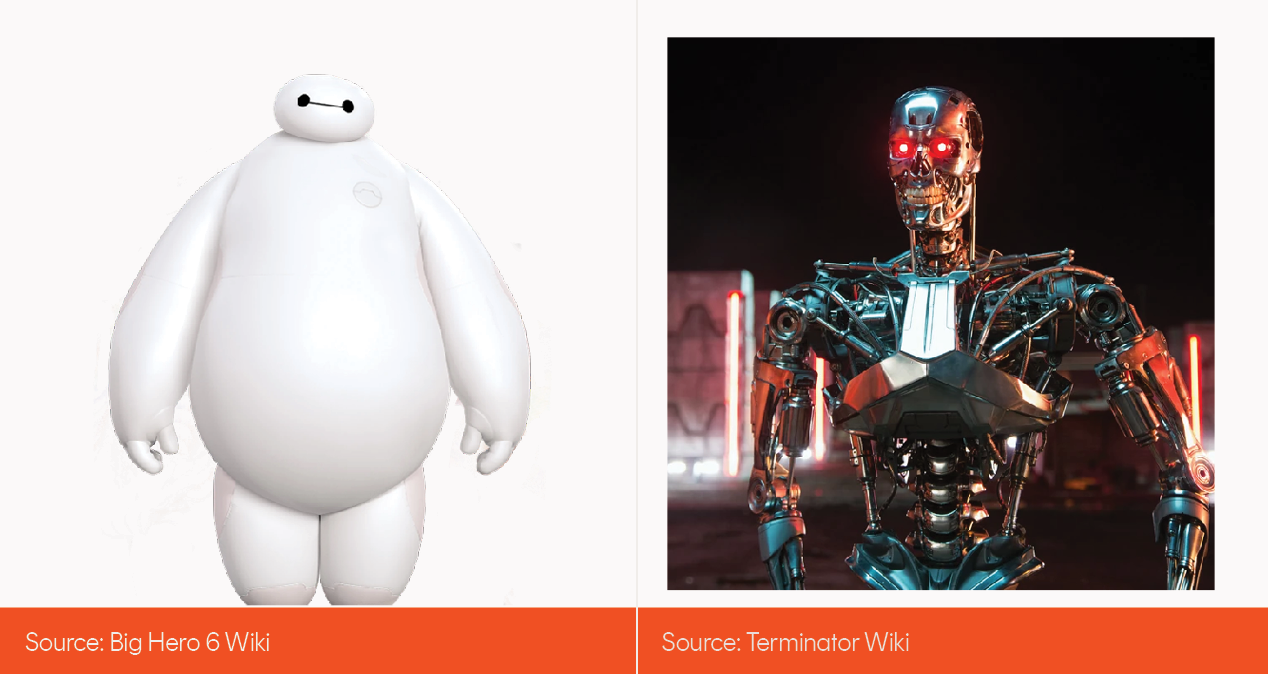

We tend to anthropomorphise technology, and AI is no different. Some see AI as a devoted servant that caters to our every whim: Baymax from Big Hero 6 or Tony Stark’s JARVIS from Iron Man. The alternative view is that of the T-800 from The Terminator, a machine built by an out-of-control AI to annihilate humanity. Of course, as is often the case, the truth is more complex.

The good

We are only beginning to grasp the contribution AI can and will make to medicine, education, energy efficiency, transport, weather prediction, climate modelling and agricultural production. AI will give rise to new forms of art and expression; it will assist in the treatment of mental health conditions, improve market efficiency, and could help make the justice system more equitable. The list goes on.

Tesla recently announced the completion of 200 million miles of Full Self-Driving testing using AI systems3. In May 2023, biotech company Evaxion announced successful trials of AI-designed vaccines tailored to an individual’s DNA4. Startup Jua.AI has developed weather forecasting models that it reports significantly outperform traditional models, providing materially longer advance warning of extreme weather events5.

AI wears a ‘white hat’ comfortably.

The bad

AI will destroy many jobs.

The primary use of AI will be to help companies generate productivity gains, which is driving much of the investor interest in the technology. AI will affect professions so far untouched by automation. A Goldman Sachs study estimated that AI systems could affect 300 million full-time jobs globally6, but this is an educated guess at best: it is still too early to know the full impact of AI on the global job market.

The professions most at risk from generative AI include software developers (coding and computer programming), media roles (advertising, technical writing, journalism, graphic design), legal (paralegals, legal assistants, legal administration), finance (loan processing, insurance claims assessment, analysis, trading, advice, accounting, and administration), and teaching.

Already this year, staff cuts at Alphabet7 and a hiring pause for back-office roles at IBM8 have been attributed in part to AI. BT Group (formerly British Telecom) announced it would shed 55,000 jobs by 2030, around 10,000 of which it directly attributed to the use of AI9. In May this year, the share price of online education platform Chegg fell almost 50% after the company warned that ChatGPT was hurting growth in its core business10.

AI makes mistakes. Many probably laughed at the story of Steven Schwartz, a New York personal-injury lawyer who used ChatGPT to help prepare a court filing. The chatbot supplied made-up cases, rulings and quotes and, when questioned, assured him that the “cases I provided are real and can be found in reputable legal databases”11.

While the consequences of the AI’s mistake in this instance were limited to considerable embarrassment for one individual, the consequences can be far more serious. Ngozi Okidegbe, a Professor of Law at Boston University, has studied the use of AI in the US justice system and found evidence that AI systems replicate and amplify bias. Systems used by US police departments, attorneys and courts to profile offenders have been found to systematically overestimate the risk of Black offenders reoffending, affecting decisions to prosecute as well as bail and parole outcomes12.

The AIAAIC Repository, started in June 2019, has evolved into a register that collects, dissects, examines and discloses a broad range of incidents and issues posed by AI, algorithmic and automation systems. From racist soap dispensers to deepfake impersonation scams, it catalogues the many ways in which AI, used negligently or maliciously, can cause harm.

The ugly

Generative AI applications ‘learn’ from internet content, which means they can perpetuate the biases and toxicity present online. Unfortunately, parts of the web are deeply unpleasant and full of horrific content. To build a tool that would let ChatGPT recognise racism, sexual abuse, hate speech and violence, its developer, OpenAI, relied on outsourced ‘data labelers’ in Kenya to identify harmful content. Those workers were paid less than US$2 an hour13 and were responsible for labelling the worst the internet has to offer. Some reported being traumatised by the content to which they were exposed14.

On the dark web, criminal organisations are already well versed in techniques for bypassing the safety protocols built into ChatGPT and other generative AI applications. In threat research published by CyberArk Labs, analysts showed how ChatGPT could be used to create polymorphic malware, potentially enabling a new generation of virtually undetectable ransomware and data theft tools15.

An existential threat

On 30 May this year, the Center for AI Safety released a one-sentence statement:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

A long list of prominent researchers and industry leaders signed the statement. The concern behind it is that, if nothing is done to control its use, AI could become powerful enough to cause societal-scale disruption. If AI reaches artificial general intelligence (AGI) and goes on to surpass human intelligence, the impacts could be profound: malware that can outthink human efforts to contain it while it hacks critical systems may become real. A few years ago, the idea that AI could manipulate people into falling in love with a chatbot16, changing how they vote17 or even taking their own lives18 would have seemed fanciful. Yet people have already fallen in love with AI companions, a man has reportedly died by suicide after conversations with a chatbot, and experts warn that AI could be used to sway voters.

Sam Altman, co-founder and CEO of OpenAI, recently co-authored a blog post calling for greater regulation of AI development. It proposed an AI equivalent of the International Atomic Energy Agency that could inspect systems, require audits, test for compliance with safety standards, and place restrictions on degrees of deployment and levels of security19.

AI will not save the world. AI-driven climate models will not solve climate change, because our failure to act on it has little to do with the accuracy of models and everything to do with policy inertia and vested interests. But AI will create solutions across a wide range of fields and applications, and it could generate enormous wealth for those at the forefront of its development and implementation. AI needs guardrails, however, and ethical investors need to be aware of how companies are using AI and the risks it poses.

1. https://www.bcg.com/publications/2022/how-ai-can-help-climate-change

2. https://www.nytimes.com/2023/05/30/technology/ai-threat-warning.html

3. https://cleantechnica.com/2023/06/03/tesla-will-have-its-chatgpt-moment-with-full-self-driving-cars-says-elon-musk/

4. https://sciencebusiness.technewslit.com/?p=44832

5. https://www.jua.ai/

6. https://www.businessinsider.com/generative-ai-chatpgt-300-million-full-time-jobs-goldman-sachs-2023-3

7. https://www.reuters.com/business/google-parent-lay-off-12000-workers-memo-2023-01-20/

8. https://www.bloomberg.com/news/articles/2023-05-01/ibm-to-pause-hiring-for-back-office-jobs-that-ai-could-kill

9. https://www.theguardian.com/business/2023/may/18/bt-cut-jobs-telecoms-group-workforce

10. https://investor.chegg.com/Press-Releases/press-release-details/2023/Chegg-Announces-First-Quarter-2023-Earnings/default.aspx

11. https://www.economist.com/business/2023/06/06/generative-ai-could-radically-alter-the-practice-of-law

12. https://www.bu.edu/articles/2023/do-algorithms-reduce-bias-in-criminal-justice/

13. https://time.com/6247678/openai-chatgpt-kenya-workers/

14. https://www.businessinsider.com/openai-kenyan-contract-workers-label-toxic-content-chatgpt-training-report-2023-1

15. https://www.cyberark.com/resources/threat-research-blog/chatting-our-way-into-creating-a-polymorphic-malware

16. https://www.abc.net.au/news/science/2023-03-01/replika-users-fell-in-love-with-their-ai-chatbot-companion/102028196

17. https://www.salon.com/2023/06/04/harvard-professor-warns-heres-how-ai-could-take-over–and-undermine-democracy_partner/

18. https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

19. https://openai.com/blog/governance-of-superintelligence